Accurate lower-limb joint kinematic estimation is critical for applications such as patient monitoring, rehabilitation, and exoskeleton control. While previous studies have employed wearable sensor-based deep learning (DL) models to estimate joint kinematics, these methods often require extensive new datasets to adapt to unseen gait patterns. Meanwhile, researchers in computer vision have advanced human pose estimation models that are easy to deploy and capable of real-time inference. However, such models are infeasible in scenarios where cameras cannot be used. To address these limitations, we propose a computer vision-based DL adaptation framework for real-time joint kinematic estimation. This framework requires only a small dataset (i.e., 1–2 gait cycles) and does not depend on professional motion capture setups. Using transfer learning, we adapted our temporal convolutional network (TCN) to stiff-knee gait data, reducing root mean square error (RMSE) by 9.7% and 19.9% compared to TCNs trained only on able-bodied data and only on stiff-knee data, respectively. Our framework demonstrates the potential for smartphone camera-trained DL models to estimate real-time joint kinematics across novel users in clinical populations, with applications in wearable robots.

In this work, we introduce a computer vision-based model adaptation pipeline for lower-limb kinematic estimation. Our hypothesis is that kinematics extracted from video can help personalize the estimation pipeline. We achieve this through transfer learning: a model pre-trained on general gait patterns is adapted to a new, unseen gait pattern using as little as one to two gait cycles of new data.

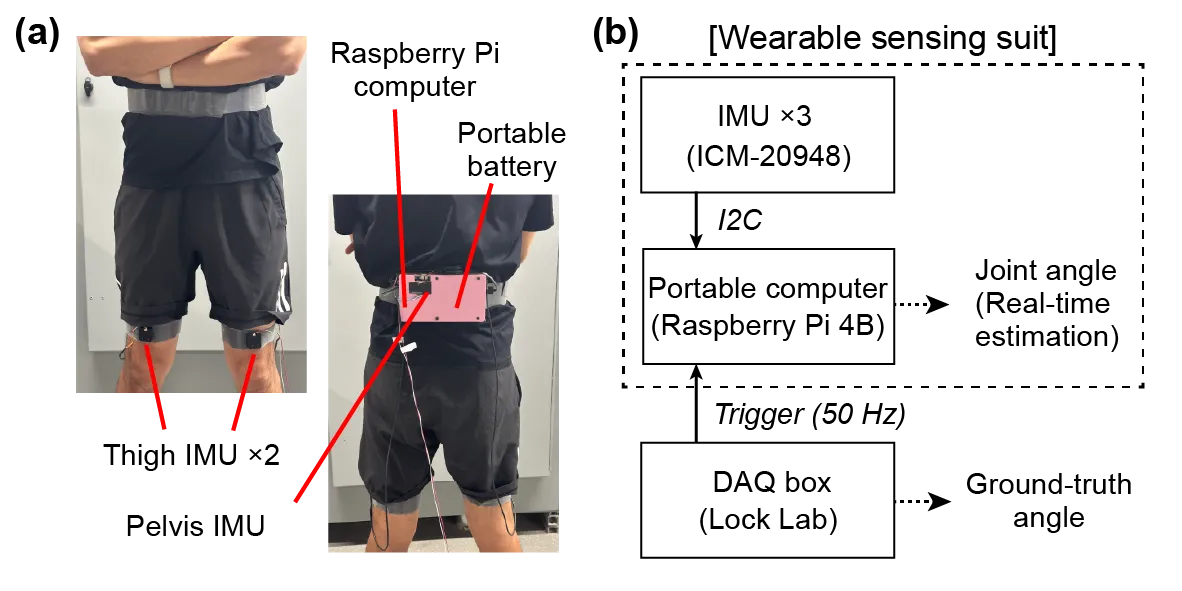

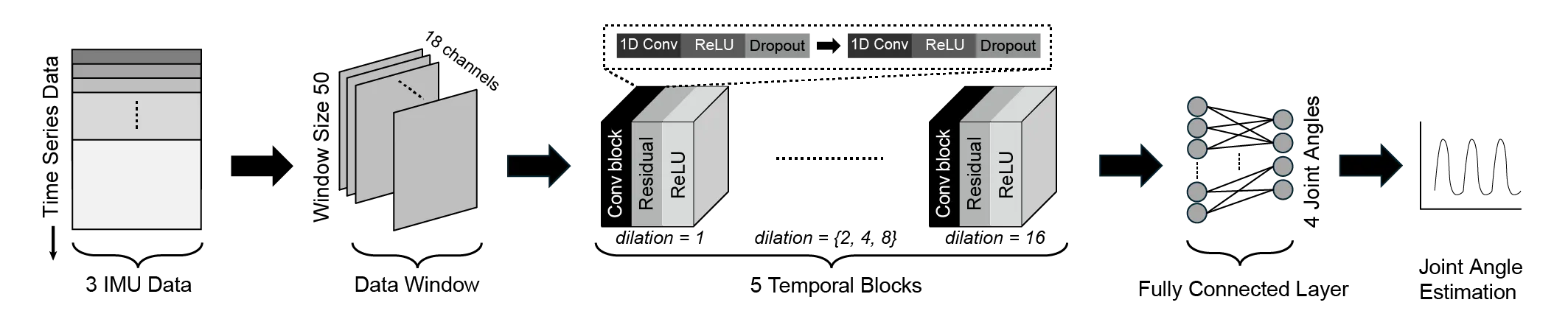

We prototyped a wearable sensing suit that includes a portable computer capable of running inference in real time. Three IMUs are attached to the pelvis and thighs. To compare our estimates with ground-truth data, we synchronized the wearable system with a data-acquisition box.

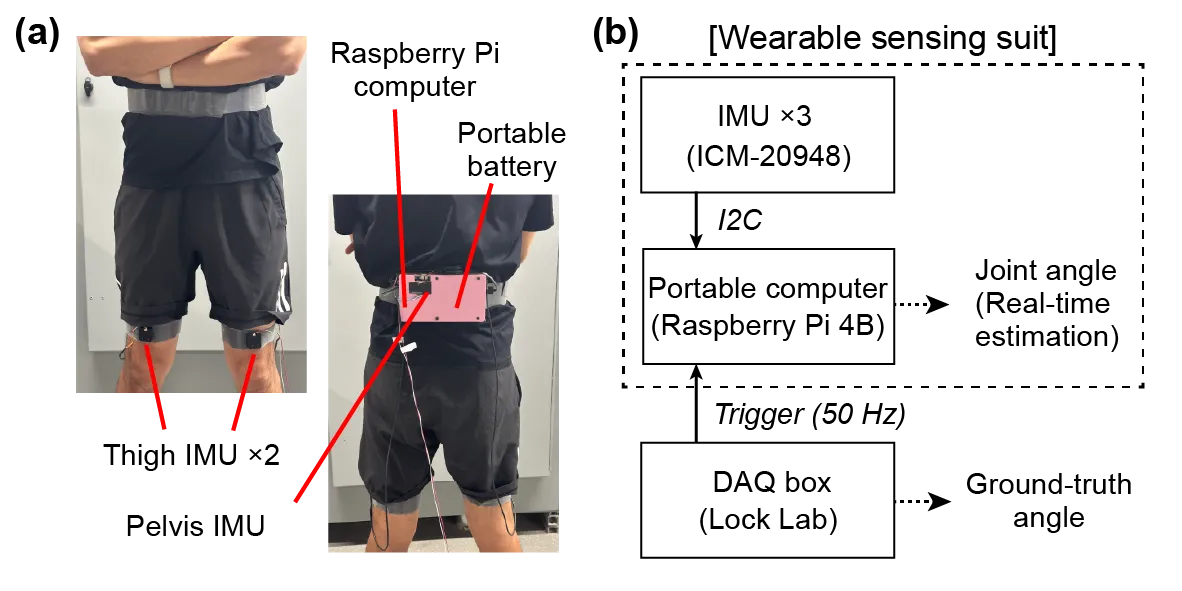

We used open-source computer vision models to estimate joint kinematics. First, we employed YOLO to detect the human subject and define the bounding box. Second, we extracted two-dimensional (2D) keypoints with ViTPose, a model known for high estimation accuracy and efficient computation. We then reconstructed three-dimensional (3D) keypoints using VideoPose3D and calculated hip and knee angles from them. The accompanying plot shows a sample output of our pose estimation pipeline.
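The final step, computing hip and knee angles from 3D keypoints, can be sketched as below. This is a minimal sketch, not the authors' code, and several choices are assumptions: the keypoints follow the Human3.6M 17-joint order commonly produced by VideoPose3D (verify the indices for your model), the coordinate frame is right-handed, hip flexion is measured from a pelvis-to-spine trunk vector, and both angles are measured in the sagittal plane defined by the hip-to-hip axis. The function names are hypothetical.

```python
import numpy as np

# ASSUMPTION: Human3.6M-style 17-joint ordering, as commonly output by
# VideoPose3D. Check the joint order of your own model before relying on it.
PELVIS, R_HIP, R_KNEE, R_ANKLE = 0, 1, 2, 3
L_HIP, L_KNEE, L_ANKLE = 4, 5, 6
SPINE = 7


def _signed_angle(u, v, axis):
    """Signed angle (degrees) from u to v about `axis`.

    Both vectors are first projected onto the plane normal to `axis`
    (here, the sagittal plane), so out-of-plane motion is ignored.
    """
    axis = axis / np.linalg.norm(axis, axis=-1, keepdims=True)
    u_p = u - np.sum(u * axis, axis=-1, keepdims=True) * axis
    v_p = v - np.sum(v * axis, axis=-1, keepdims=True) * axis
    sin = np.sum(np.cross(u_p, v_p) * axis, axis=-1)
    cos = np.sum(u_p * v_p, axis=-1)
    return np.degrees(np.arctan2(sin, cos))


def sagittal_joint_angles(kp):
    """Hip and knee flexion (degrees, flexion positive) from keypoints of shape (T, 17, 3).

    Assumes a right-handed coordinate frame.
    """
    kp = np.asarray(kp, dtype=float)
    ml_axis = kp[:, R_HIP] - kp[:, L_HIP]     # points to the subject's right
    trunk_down = kp[:, PELVIS] - kp[:, SPINE]  # reference direction for the hip
    angles = {}
    for side, hip, knee, ankle in (("right", R_HIP, R_KNEE, R_ANKLE),
                                   ("left", L_HIP, L_KNEE, L_ANKLE)):
        thigh = kp[:, knee] - kp[:, hip]
        shank = kp[:, ankle] - kp[:, knee]
        angles[f"{side}_hip"] = _signed_angle(trunk_down, thigh, ml_axis)
        # Knee flexion rotates the shank backward relative to the thigh,
        # the opposite sense to hip flexion, hence the minus sign.
        angles[f"{side}_knee"] = -_signed_angle(thigh, shank, ml_axis)
    return angles
```

Projecting onto the sagittal plane keeps the result comparable to flexion/extension angles from motion capture; a full 3D joint-coordinate-system decomposition would be needed for ab/adduction or rotation.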

To synchronize the video with ground-truth data, we used one camera from the markerless motion capture system. Of the six available camera views, we selected the right-side view because it gave the lowest angle error. Among the ViTPose model sizes, we chose ViTPose-base because it gave the lowest estimation error for that view (see Table 1).

Table 1: Joint angle error of each ViTPose model size for the six camera views (lower is better).

| Vision model | Left front | Left side | Left back | Right front | Right side | Right back |
|---|---|---|---|---|---|---|
| ViTPose-small | 16.30 | 10.01 | 10.88 | 12.75 | 9.66 | 10.82 |
| ViTPose-base | 16.50 | 10.03 | 10.74 | 13.04 | 9.60 | 10.53 |
| ViTPose-large | 16.46 | 10.07 | 10.90 | 13.64 | 9.79 | 10.41 |
| ViTPose-huge | 16.40 | 10.89 | 10.81 | 13.89 | 9.94 | 10.50 |
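The two-step selection (camera view first, then model size) can be reproduced from the values in Table 1. This sketch assumes the view was chosen by averaging over model sizes; the text does not say how views were compared, and simply taking the lowest single entry (9.60) gives the same answer. Note that ViTPose-small has a marginally lower average across all views, so the choice of ViTPose-base only holds once the view is fixed.

```python
# Angle errors transcribed from Table 1 (lower is better).
VIEWS = ["left_front", "left_side", "left_back",
         "right_front", "right_side", "right_back"]
ERRORS = {
    "small": [16.30, 10.01, 10.88, 12.75, 9.66, 10.82],
    "base":  [16.50, 10.03, 10.74, 13.04, 9.60, 10.53],
    "large": [16.46, 10.07, 10.90, 13.64, 9.79, 10.41],
    "huge":  [16.40, 10.89, 10.81, 13.89, 9.94, 10.50],
}


def best_view(errors):
    """Camera view with the lowest error averaged over all model sizes."""
    mean_by_view = {
        view: sum(row[i] for row in errors.values()) / len(errors)
        for i, view in enumerate(VIEWS)
    }
    return min(mean_by_view, key=mean_by_view.get)


def best_model(errors, view):
    """Model size with the lowest error for a fixed camera view."""
    i = VIEWS.index(view)
    return min(errors, key=lambda model: errors[model][i])


view = best_view(ERRORS)
model = best_model(ERRORS, view)
print(view, model)  # right_side base
```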

We also applied the computer vision pipeline to an irregular gait pattern, specifically a stiff-knee (SK) gait. We simulated the SK gait by having the subject wear a knee brace on the right leg, resulting in an asymmetric, knee-constrained gait pattern.

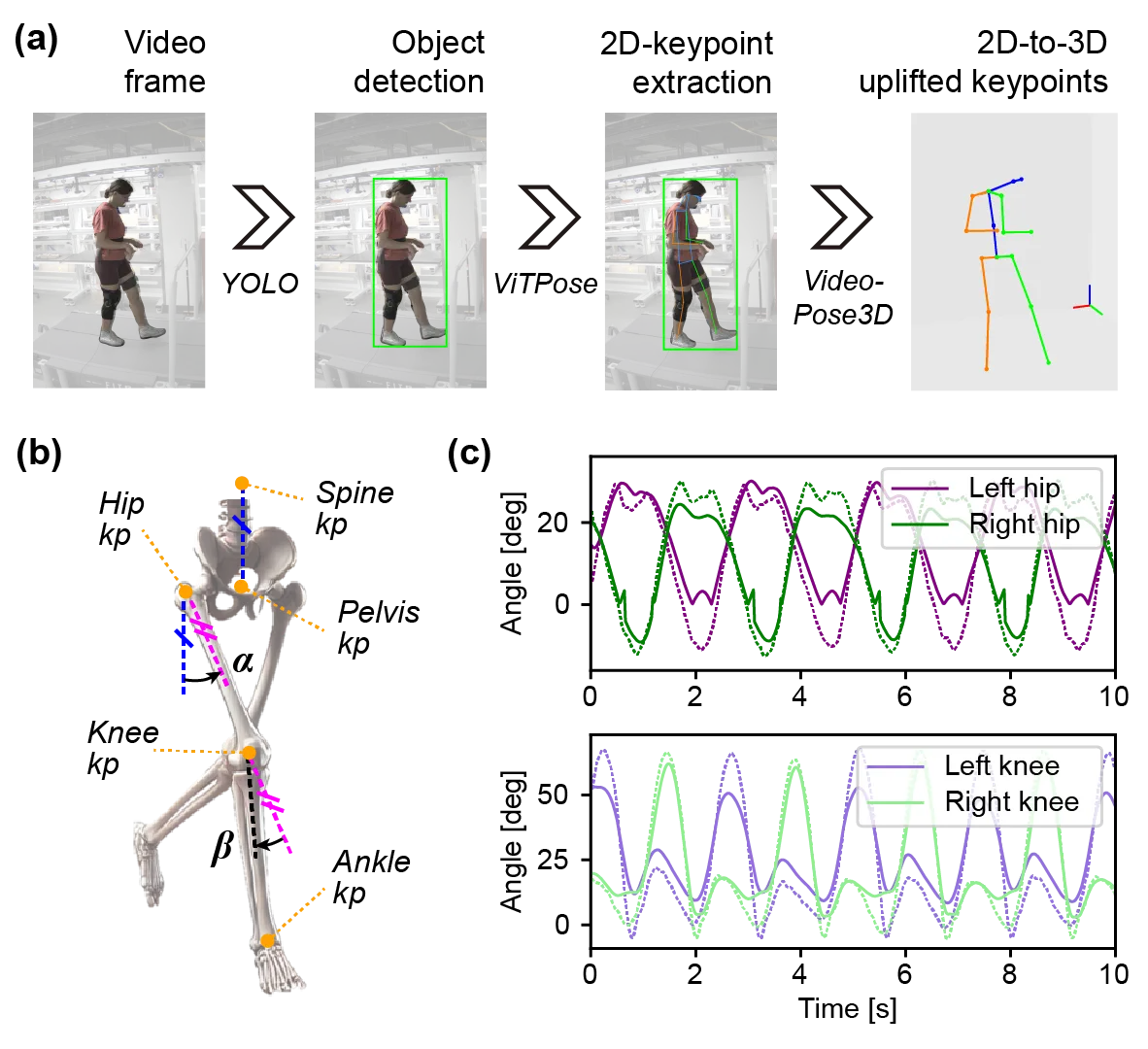

We used a temporal convolutional network (TCN) to estimate joint angles from IMU inputs. TCNs are widely used in the exoskeleton field because they model time-series data robustly. We used an input window of 50 samples and built five temporal blocks into the TCN architecture.
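To make the architecture description concrete, here is a forward-pass-only NumPy sketch of a generic residual TCN built from dilated causal convolutions. Only the 50-sample window and the five temporal blocks come from the text; the kernel size (2), doubling dilations, 32 channels, 18 IMU input channels, 4 output angles, and the omission of dropout and weight normalization are assumptions, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)


def causal_conv1d(x, w, b, dilation):
    """Dilated causal 1D convolution.

    x: (T, C_in), w: (K, C_in, C_out), b: (C_out,) -> (T, C_out).
    The output at time t depends only on inputs at times <= t.
    """
    K, T = w.shape[0], x.shape[0]
    pad = (K - 1) * dilation
    xp = np.pad(x, ((pad, 0), (0, 0)))  # left padding only: no look-ahead
    out = np.zeros((T, w.shape[2]))
    for k in range(K):
        # Tap k reads the input (K - 1 - k) * dilation steps in the past.
        out += xp[k * dilation : k * dilation + T] @ w[k]
    return out + b


def relu(z):
    return np.maximum(z, 0.0)


def init_block(c_in, c_out, k):
    """Random weights for one temporal block (two convs plus a residual path)."""
    return {
        "w1": rng.normal(0, 1 / np.sqrt(k * c_in), (k, c_in, c_out)),
        "b1": np.zeros(c_out),
        "w2": rng.normal(0, 1 / np.sqrt(k * c_out), (k, c_out, c_out)),
        "b2": np.zeros(c_out),
        # 1x1 projection so the residual matches c_out when channels change.
        "w_res": rng.normal(0, 1 / np.sqrt(c_in), (c_in, c_out)) if c_in != c_out else None,
    }


def temporal_block(x, blk, dilation):
    h = relu(causal_conv1d(x, blk["w1"], blk["b1"], dilation))
    h = relu(causal_conv1d(h, blk["w2"], blk["b2"], dilation))
    res = x if blk["w_res"] is None else x @ blk["w_res"]
    return relu(h + res)


def init_tcn(n_inputs, n_outputs, channels=32, n_blocks=5, k=2):
    blocks, c_in = [], n_inputs
    for _ in range(n_blocks):
        blocks.append(init_block(c_in, channels, k))
        c_in = channels
    head = rng.normal(0, 1 / np.sqrt(channels), (channels, n_outputs))
    return {"blocks": blocks, "head": head}


def tcn_forward(params, window):
    """window: (T, n_inputs) IMU samples -> joint angles at the last time step."""
    h = window
    for i, blk in enumerate(params["blocks"]):
        h = temporal_block(h, blk, dilation=2 ** i)  # dilations 1, 2, 4, 8, 16
    return h[-1] @ params["head"]


def receptive_field(k, n_blocks):
    """Number of input samples visible to the last output (two convs per block)."""
    return 1 + 2 * (k - 1) * sum(2 ** i for i in range(n_blocks))


# Hypothetical sizes: 3 IMUs x 6 channels in, 4 angles (L/R hip, L/R knee) out.
params = init_tcn(n_inputs=18, n_outputs=4)
window = rng.normal(size=(50, 18))
print(tcn_forward(params, window).shape)  # (4,)
print(receptive_field(k=2, n_blocks=5))   # 63
```

Under these assumed hyperparameters, five blocks with doubling dilations give a 63-sample receptive field, enough to cover the whole 50-sample window; this illustrates why window length and block count are usually chosen together, not how the authors configured their network.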

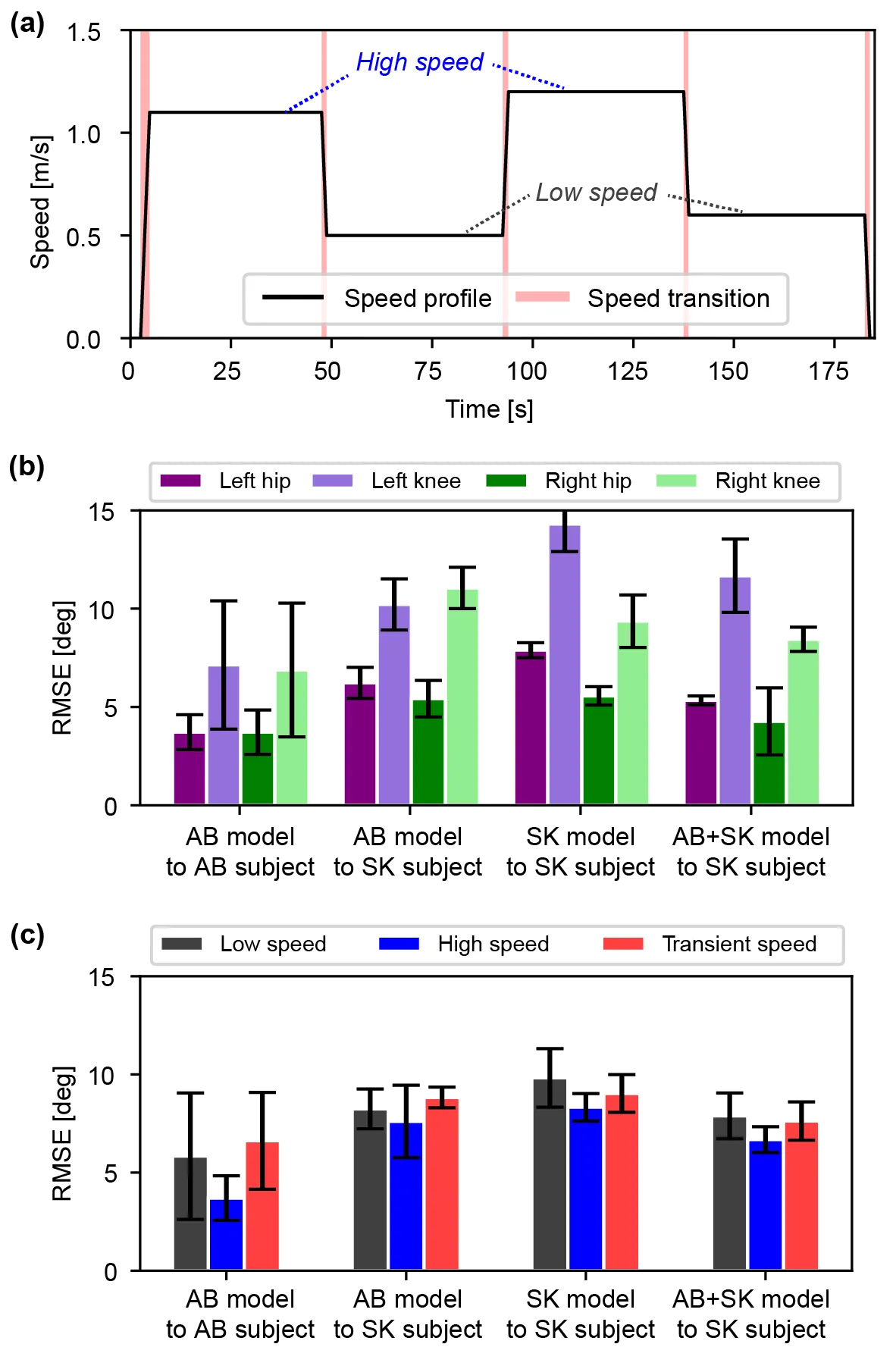

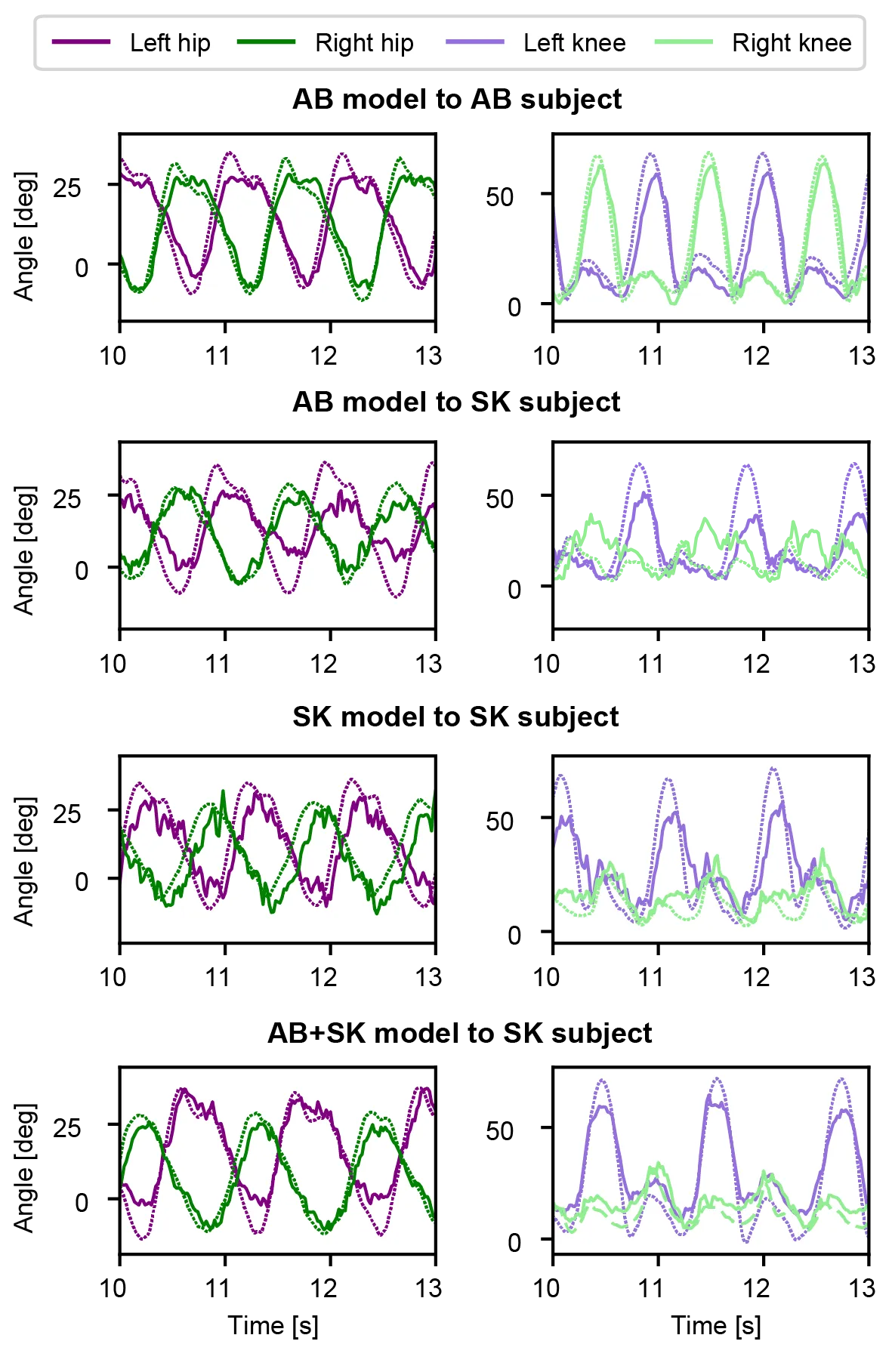

We first trained a TCN model (the AB model) on data from three able-bodied subjects walking at four different speeds. We then adapted this model to the irregular SK gait.
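Training on several subjects and speeds requires cutting each recording into 50-sample windows. A minimal sketch, assuming each trial is stored as separate IMU and joint-angle arrays at a common sampling rate and that each window is labeled with the angle at its last sample (both assumptions; the function names are hypothetical):

```python
import numpy as np


def make_windows(imu, angles, window=50):
    """Slice one recording into overlapping, stride-1 windows.

    imu: (T, C) IMU samples; angles: (T, J) joint-angle labels; needs T >= window.
    Returns X: (T - window + 1, window, C) and y: (T - window + 1, J), where
    each label is the joint angle at the last sample of its window.
    """
    T = len(imu)
    idx = np.arange(window)[None, :] + np.arange(T - window + 1)[:, None]
    return imu[idx], angles[window - 1:]


def build_dataset(trials, window=50):
    """Window each trial separately so no window spans two recordings
    (for example, two subjects or two walking speeds), then stack them."""
    pairs = [make_windows(imu, angles, window) for imu, angles in trials]
    X = np.concatenate([p[0] for p in pairs])
    y = np.concatenate([p[1] for p in pairs])
    return X, y
```

For three subjects at four speeds, `trials` would hold 12 `(imu, angles)` pairs. Windowing before concatenation avoids training samples that splice the end of one trial onto the start of another.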

We investigated how much new data transfer learning to the SK gait requires. We found that just 6% of the SK dataset, equivalent to about one or two gait cycles, was sufficient for estimation accuracy to converge. We then used this 6% subset to train the AB+SK model used in the subsequent validation experiments.
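The adaptation step can be illustrated with warm-start fine-tuning: start from the pre-trained weights and continue gradient descent on a small, contiguous slice of the new data. To keep the sketch short and runnable, a linear model in NumPy stands in for the TCN and the data are synthetic. Whether the authors froze any layers, and which optimizer and learning rate they used, is not stated here, so everything below (names included) is hypothetical.

```python
import numpy as np


def first_fraction(X, y, frac):
    """Take the first `frac` of a recording as one contiguous block, which
    keeps whole gait cycles together (unlike random sampling)."""
    n = max(1, int(round(frac * len(X))))
    return X[:n], y[:n]


def mse(W, X, y):
    return float(np.mean((X @ W - y) ** 2))


def fine_tune(W_pretrained, X, y, lr=0.01, steps=200):
    """Warm-start gradient descent on squared error, starting from pre-trained weights."""
    W = W_pretrained.copy()
    n = len(X)
    for _ in range(steps):
        # Gradient of the squared error summed over outputs, averaged over samples.
        grad = 2.0 / n * X.T @ (X @ W - y)
        W -= lr * grad
    return W


# Toy data only: W_ab stands in for the AB-pretrained model and W_sk for a
# shifted SK mapping; 18 input features and 4 outputs are arbitrary choices.
rng = np.random.default_rng(0)
W_ab = rng.normal(size=(18, 4))
W_sk = W_ab + 0.3 * rng.normal(size=(18, 4))
X_sk = rng.normal(size=(2000, 18))
y_sk = X_sk @ W_sk

X_small, y_small = first_fraction(X_sk, y_sk, 0.06)  # 6% -> 120 samples
W_ab_sk = fine_tune(W_ab, X_small, y_small)
print(len(X_small))  # 120
```

The key design choice is the starting point: an SK-only model would begin from random weights on the same small slice, whereas fine-tuning reuses what the AB model already learned about general gait.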

Our real-time validation experiments included both AB and SK subjects. On SK subjects, the adapted AB+SK model reduced RMSE by about 10% compared to the AB-only model, and by about 20% compared to the SK-only model. This improvement was consistent across speed conditions, including low, high, and even transient speeds.
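The reported improvements are most naturally read as relative reductions in RMSE, as the abstract's wording suggests, rather than absolute differences in degrees. A minimal sketch of both quantities (function names are hypothetical):

```python
import numpy as np


def rmse(pred, target):
    """Root mean square error over all samples, in the units of the inputs."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean((pred - target) ** 2)))


def relative_reduction(rmse_baseline, rmse_new):
    """Percent by which rmse_new is lower than rmse_baseline."""
    return 100.0 * (rmse_baseline - rmse_new) / rmse_baseline


# Toy example: a baseline RMSE of 10 deg lowered to 9 deg is a 10% reduction.
print(relative_reduction(10.0, 9.0))  # 10.0
```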

@misc{song2024personalizationwearablesensorbasedjoint,
  title={Personalization of Wearable Sensor-Based Joint Kinematic Estimation Using Computer Vision for Hip Exoskeleton Applications},
  author={Changseob Song and Bogdan Ivanyuk-Skulskyi and Adrian Krieger and Kaitao Luo and Inseung Kang},
  year={2024},
  eprint={2411.15366},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2411.15366},
}

This work was supported in part by the Kwanjeong Educational Foundation.